Ziyu Chen

Ph.D. Student in Computer Science

Email: ziyuchen@stanford.edu

I am a first-year CS Ph.D. student at Stanford University, advised by Prof. Jiajun Wu. My research is generously supported by the Stanford Graduate Fellowship.

Previously, I worked with Prof. Yue Wang as a visiting student at the University of Southern California and collaborated closely with Prof. Marco Pavone of Stanford University. I received my Master's degree (advised by Prof. Li Song) and my Bachelor's degree from Shanghai Jiao Tong University.

Research Focus. My current research centers on visual generative models: methods and architectures that unify visual understanding and generation and that make generation more efficient, more controllable, and better aligned with human intent, along with their applications to physical-world modeling.

My past work focused on neural 3D representations (NeRF, SDF, 3DGS), large-scale dynamic scene modeling, human body modeling, and closed-loop simulation and evaluation for embodied agents.


Mar 2025: Awarded the Stanford Graduate Fellowship 🎓

Feb 2025: OmniRe was accepted to ICLR 2025 as a Spotlight paper 🌟 Shout-out to all my collaborators!

Oct 2024: Gave a talk at the MIT Visual Computing Seminar and visited beautiful Boston! 🚣

Aug 2024: Released DriveStudio, a 3DGS system for driving-scene reconstruction and simulation! 🚗

Sep 2023: Joined the Geometry, Vision, and Learning Lab as a research intern, advised by Prof. Yue Wang!


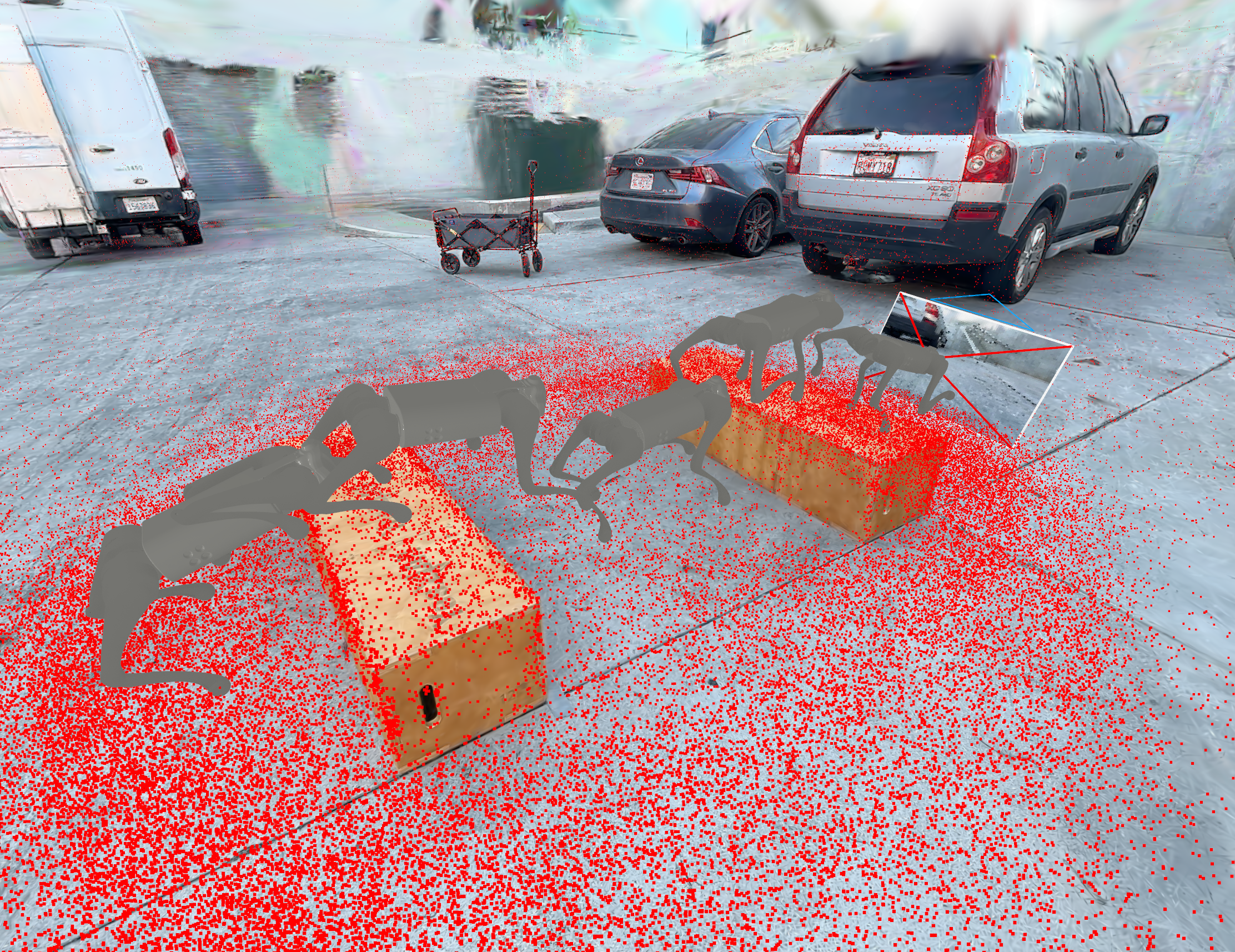

A toolchain and benchmark suite for hyper-realistic visual locomotion that builds high-fidelity digital twins of real-world environments for closed-loop evaluation.


OmniRe is a holistic framework for dynamic scene reconstruction that models the full scene: static backgrounds, driving vehicles, and non-rigidly moving dynamic actors.


We propose a diffusion-based pipeline that generates complete 360° panoramas from one or more unregistered narrow field-of-view (NFoV) images captured at arbitrary angles. The pipeline delivers superior-quality panoramas and, thanks to its text-conditioned generation, also supports a broader range of outputs.


Our L-Tracing-based reflectance factorization framework renders photo-realistic novel views with a nearly 10× speedup over the same framework using volumetric integration for light-visibility estimation.


OmniRe: Omni Urban Scene Reconstruction


Stanford Graduate Fellowship, Stanford University, 2025

National Scholarship, Shanghai Jiao Tong University, 2023

Zhiyuan Honor Fellowship, Shanghai Jiao Tong University, 2018-2022